IP-fabric network configuration in VMmanager helps to save public IP addresses and create isolated networks

Last fall, our virtualization platform was supplemented with a new network configuration type - BGP-based IP-fabric. We have already written about the benefits of this technology for isolating private networks and saving IP addresses. This time, Alexander Grishin, VMmanager Product Owner, will explain in more detail how IP-fabric works and how to use it in a cluster.

IP-fabric lets a virtual machine or container use a public network that runs on top of the company's local network. With this configuration, the service is abstracted from the internal infrastructure.

Here, I will explain the features of its implementation and show an example of IP-fabric configuration.

IP-fabric: configuration in VMmanager

Glossary

BGP — dynamic routing protocol. It is responsible for exchange of routes between nodes and network equipment of the company.

Node — a physical server on which virtual machines and containers are deployed.

Core or Border Gateway — the device through which routing is performed

Bird service — the software which implements the BGP protocol in Linux.

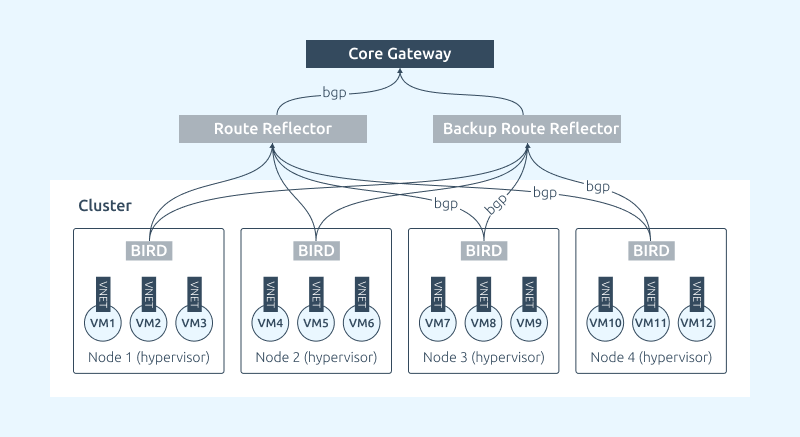

Route Reflector (RR) — a device that receives routes from nodes and transmits them to the Core/Border Gateway via the BGP protocol.

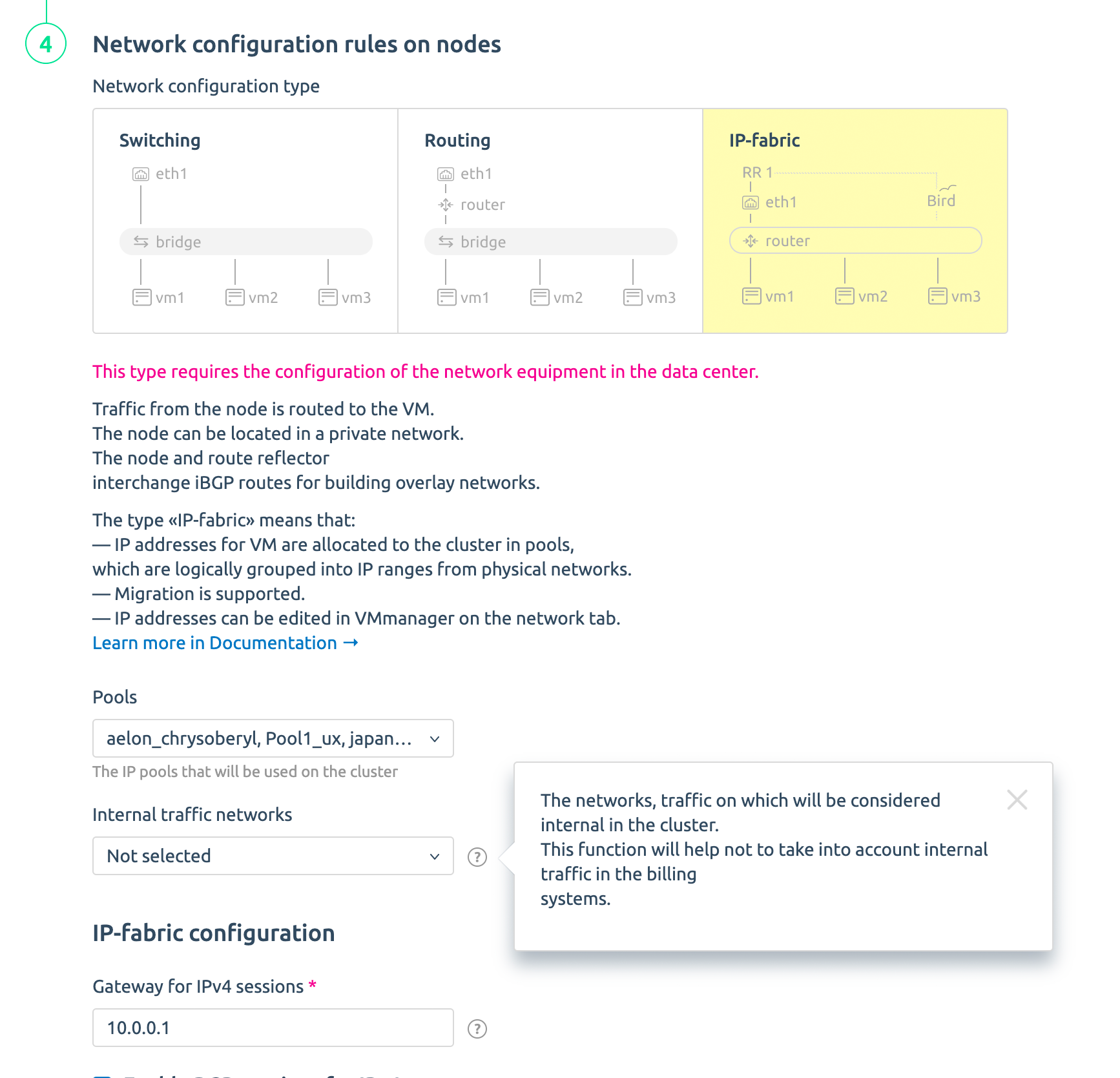

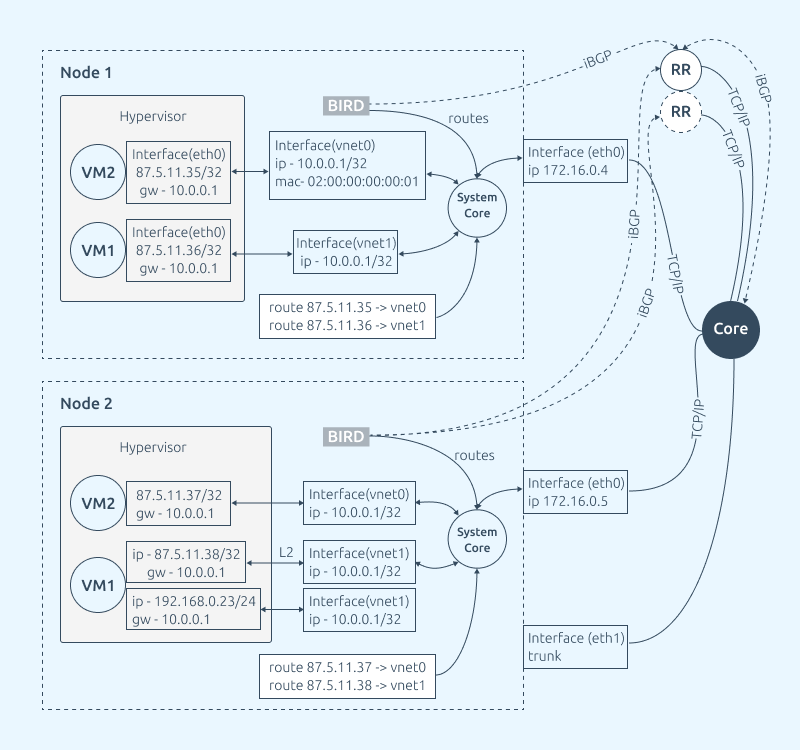

By IP-fabric we mean one of the network configurations available for nodes in VMmanager. The configuration applies to the entire cluster. It is the knowledge of our network engineers, digitized and packaged in a product. The configuration has two levels:

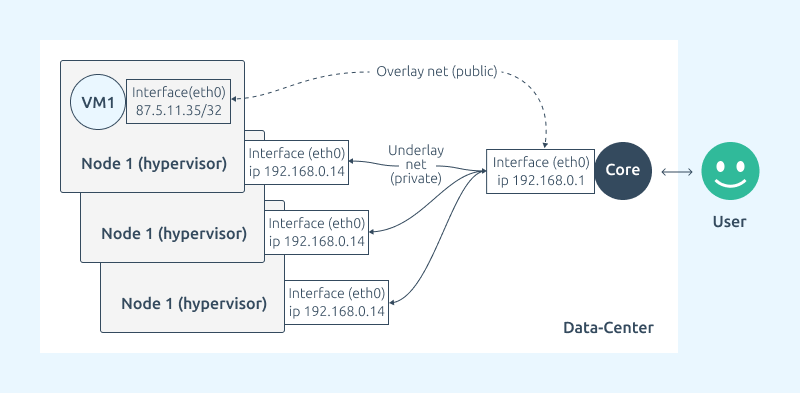

- Underlay level: an ordinary flat local network for the nodes. Only certain specialists of the company can access it. This is the company's secure closed circuit.

- Overlay level: a /32 network with a public IP address, created for each virtual machine or container. It is a point-to-point connection from the router to a specific virtual interface on the hypervisor. Traffic is routed to this interface via BGP: the VM and container addresses are announced to the network. This allows users to reach their virtual machine or container at a public address.
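The overlay idea can be illustrated with a short Python sketch. It models only the bookkeeping: every VM address becomes a /32 host route that points at that VM's own virtual interface on the node. The sample addresses (taken from a documentation range), the vnetN interface names and the helper name host_routes are assumptions made for illustration; this is not VMmanager code.

```python
import ipaddress

def host_routes(vm_addresses):
    """Return (prefix, interface) pairs, one /32 route per VM address.

    Hypothetical helper: the vnetN naming is assumed for the example only.
    """
    routes = []
    for index, address in enumerate(vm_addresses):
        # ip_address() validates the input; a /32 prefix covers exactly
        # one address, so the route is point-to-point.
        prefix = ipaddress.ip_network(f"{ipaddress.ip_address(address)}/32")
        routes.append((str(prefix), f"vnet{index}"))
    return routes

for prefix, interface in host_routes(["203.0.113.10", "203.0.113.11"]):
    print(f"{prefix} via {interface}")
```

Each route describes a single address, which is why no separate subnet has to be carved out per client.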

IP-fabric functionality is configured and used at the cluster level and applies to all nodes in the cluster. Let us look at some technical features of this cluster configuration:

- No linux-bridge is used on the nodes;

- The default gateway for each virtual machine is a separate virtual interface on the node (vnet);

- All these virtual interfaces have the same IP address and MAC address;

- Nodes act as routers, and despite the interfaces sharing the same IP and MAC address, they know exactly which interface a particular packet is intended for;

- VMmanager automatically installs, configures and maintains Bird service on each node;

- You can use the RR role to exchange routes between the nodes and the router. This avoids reconfiguring a live router every time a node is added to the cluster;

- BGP peering must be configured between the RR and each cluster node;

- The peering between the RR and the router is configured once. There is no need to reconfigure the router when new nodes are added;

- Multiple RRs per cluster can be used for fault tolerance;

- It is technically possible to skip the RR role and peer the nodes directly with the router. This is justified for a test or lab bench in a sandbox. However, this scheme is not recommended in production: every time you add a node to the cluster, you will have to reconfigure the Border Gateway/Core, and a single mistake there can bring down the company's entire network.

A safer option is to configure the intermediate RR role once and then set up peering between the RR and each newly added node.
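To make the difference concrete, here is a rough Python sketch that counts how many BGP sessions have to be configured on the router itself in each scheme. The function name and the sample cluster sizes are assumptions for illustration; the only facts taken from the text are that nodes peer either directly with the router or with the RRs, and that the router peers with each RR once.

```python
def router_bgp_sessions(node_count, rr_count=0):
    """BGP sessions configured on the router (Border Gateway/Core)."""
    if node_count < 0 or rr_count < 0:
        raise ValueError("counts must be non-negative")
    if rr_count == 0:
        # No RR: every node peers with the router directly, so each
        # new node means another change on the router.
        return node_count
    # With RRs: the router peers only with the RRs, whatever the node count.
    return rr_count

for nodes in (2, 10, 50):
    direct = router_bgp_sessions(nodes)
    via_rr = router_bgp_sessions(nodes, rr_count=2)
    print(f"{nodes} nodes: {direct} router sessions without RR, {via_rr} with two RRs")
```

With RRs the number stays constant as the cluster grows, so the router configuration does not have to be touched again.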

When a new virtual machine or container is created in a cluster, the following happens:

- The VM or container is deployed on the node;

- Routing is configured on the node;

- The Bird service is configured on the node;

- Bird announces the route to the new virtual machine to the Route Reflector via BGP;

- Route Reflector transmits this route via BGP further, towards the Core (Border Gateway);

- Core (Border Gateway) receives the route to the new virtual machine and can handle its traffic in both directions.

The RR itself does not take part in routing traffic. It serves only as a BGP intermediary between the nodes and the Border Gateway/Core, so it is not demanding in terms of resources.
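The sequence above can be sketched as a toy simulation in Python. It is not how Bird or the router work internally. The class names and the addresses are invented for the example, and it assumes standard BGP route reflection, in which the RR passes a route on without changing its next hop.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Route:
    prefix: str    # the /32 prefix of the VM
    next_hop: str  # underlay address of the node that hosts the VM

@dataclass
class Core:
    table: dict = field(default_factory=dict)

    def receive(self, route):
        # The Core installs the route; traffic will go straight to next_hop.
        self.table[route.prefix] = route.next_hop

@dataclass
class RouteReflector:
    core: Core

    def receive(self, route):
        # Reflect the route unchanged: the next hop still points at the node.
        self.core.receive(route)

@dataclass
class Node:
    address: str
    rr: RouteReflector

    def create_vm(self, vm_ip):
        # Bird on the node announces a /32 route for the new VM to the RR.
        self.rr.receive(Route(prefix=f"{vm_ip}/32", next_hop=self.address))

core = Core()
node = Node(address="10.0.0.11", rr=RouteReflector(core))
node.create_vm("203.0.113.10")
print(core.table)
```

The RR's own address never appears in the Core's table, which is the reason it stays out of the data path.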

Now that it is clear how everything works, it is time to explain how this benefits the company. IP-fabric covers several company needs at once:

- Savings. IP-fabric saves public addresses. The larger the company's infrastructure, the greater the savings.

- Better security. The company's infrastructure is essentially in a closed loop, isolated from clients. In addition, all clients are isolated from each other.

- Higher client satisfaction. Because clients' broadcast traffic is eliminated, network performance increases several times over. This has a positive effect on satisfaction with the service, because everyone likes a fast network.

It is worth noting that some companies use VLANs to solve these problems. However, IP-fabric scales better and can be customized more flexibly to client needs.
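The article gives no figures for the address savings, so the following Python sketch is only a rough illustration under stated assumptions. It compares a pool cut into one small subnet per client, where each subnet loses its network address, its broadcast address and one address for the gateway, with a pool handed out as /32 routes, where every address is assumed to be assignable to a VM. The pool, the subnet sizes and both function names are hypothetical.

```python
import ipaddress

def usable_with_subnets(pool, client_prefix):
    """VM addresses left when the pool is split into per-client subnets.

    Assumption: each subnet loses its network and broadcast addresses,
    plus one host address for the gateway.
    """
    total = 0
    for subnet in ipaddress.ip_network(pool).subnets(new_prefix=client_prefix):
        hosts = subnet.num_addresses - 2  # minus network and broadcast
        total += max(hosts - 1, 0)        # minus the gateway
    return total

def usable_with_host_routes(pool):
    """Assumption: with /32 routes every address in the pool can go to a VM."""
    return ipaddress.ip_network(pool).num_addresses

pool = "203.0.113.0/24"
print("per-client /30 subnets:", usable_with_subnets(pool, 30))
print("per-client /29 subnets:", usable_with_subnets(pool, 29))
print("/32 host routes:       ", usable_with_host_routes(pool))
```

Under these assumptions a /24 yields 64 VM addresses with /30 subnets, 160 with /29 subnets and 256 with /32 routes. Real numbers depend on how the networks were laid out before.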

IP-fabric configuration option

Let us review a simple way to configure IP-fabric in an infrastructure consisting of a cluster of virtualization servers. The role of the Border Gateway is performed by a Juniper MX router. Several physical servers running Linux will function as Route Reflectors.

We will need:

- A server on which to install the VMmanager 6 platform;

- One or more servers to act as hypervisors, running CentOS 8 for KVM or Ubuntu 20.04 for LXD;

- One or two Linux servers for the RR role;

- The ability to configure BGP sessions on the Juniper MX side;

- The BGP autonomous system (AS) details for the VMmanager nodes and the Core Gateway;

- An IP address pool for virtual machines.

Configuration of IP-fabric requires three steps:

- Router configuration;

- Server configuration as RR;

- VMmanager configuration.

Router configuration algorithm

Let us set up BGP peering between the router and the RR. To connect the RR to the Juniper MX router:

- Make sure BGP is already set up with your provider before you start.

- Replace the variables in the commands below with your values:

  {{ AS }}: the AS number;

  {{ filter }}: the list of networks that we receive from the VMmanager side and pass on to the router;

  {{ rr_ip }}: the IP address of the RR that is used for peering.

- Add a new filter:

      set policy-options policy-statement VM term isp-ipv4 from protocol bgp
      set policy-options policy-statement VM term isp-ipv4 from route-filter {{ filter }} orlonger
      set policy-options policy-statement VM term isp-ipv4 then accept
      set policy-options policy-statement VM then reject
      set policy-options policy-statement reject-all then reject

- Add a new group to the config:

      set protocols bgp group VM import VM
      set protocols bgp group VM export reject-all
      set protocols bgp group VM peer-as {{ AS }}
      set protocols bgp group VM neighbor {{ rr_ip }}

- Check and apply the configuration:

      commit check
      commit confirmed 5

- If the commit is not confirmed within 5 minutes, the router automatically rolls back to the previous configuration.

- Check that the RR has sent us the routes (we are interested in the Accepted prefixes line):

      show bgp group VM detail

- If everything is OK, confirm the configuration:

      commit
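The "replace the variables" step can be done by hand, but a small script makes it harder to leave a placeholder behind. The Python sketch below substitutes the {{ ... }} placeholders in the router commands. The render helper and all sample values (AS 65000, the documentation network 203.0.113.0/24, the RR address 10.0.0.2) are assumptions for illustration. For simplicity it fills {{ filter }} with a single network.

```python
import re

JUNOS_TEMPLATE = """\
set policy-options policy-statement VM term isp-ipv4 from protocol bgp
set policy-options policy-statement VM term isp-ipv4 from route-filter {{ filter }} orlonger
set policy-options policy-statement VM term isp-ipv4 then accept
set policy-options policy-statement VM then reject
set policy-options policy-statement reject-all then reject
set protocols bgp group VM import VM
set protocols bgp group VM export reject-all
set protocols bgp group VM peer-as {{ AS }}
set protocols bgp group VM neighbor {{ rr_ip }}
"""

def render(template, values):
    """Replace every {{ name }} placeholder; fail loudly if a value is missing."""
    def substitute(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value for placeholder {name!r}")
        return str(values[name])
    return re.sub(r"\{\{\s*([\w.]+)\s*\}\}", substitute, template)

# Sample values only; use your own AS, network and RR address.
rendered = render(JUNOS_TEMPLATE, {
    "AS": "65000",
    "filter": "203.0.113.0/24",
    "rr_ip": "10.0.0.2",
})
print(rendered)
```

The same helper also works for the dotted placeholder names used in the Bird config, such as {{ bird.as }}.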

RR configuration algorithm

Now let us set up BGP peering between the RR and the router, as well as between the RR and the future nodes of the virtualization cluster.

- Install Bird on the server.

- Before configuring, prepare the following information:

  {{ filter }}: the list of networks that we receive from the VMmanager side and pass on to the router;

  {{ local_ip }}: the IP address of the RR that is used for peering with both VMmanager and the router;

  {{ bird.as }}: the AS number;

  {{ bird.community }}: the BGP community;

  {{ route.name }}: a description (label) for the router;

  {{ router.ip }}: the IP address of the router that is used for peering;

  {{ neighbor }}: a description for the VMmanager node;

  {{ neighbor_ip }}: the IP address of the VMmanager node that is used for peering.

- Write the config /etc/bird/bird.conf:

      log syslog { debug, trace, info, remote, warning, error, auth, fatal, bug };
      log "/var/log/bird.log" all;
      log stderr all;

      router id {{ local_ip }};

      protocol kernel {
          learn;
          scan time 20;
          import none;
          export none;
      }

      function avoid_martians()
      prefix set martians;
      {
          martians = [ 169.254.0.0/16+, 172.16.0.0/12+, 192.168.0.0/16+, 10.0.0.0/8+, 224.0.0.0/4+, 240.0.0.0/4+, 0.0.0.0/0{0,7} ];

          # Avoid RFC1918 and similar networks
          if net ~ martians then return false;
          return true;
      }

      filter ibgp_policy_out
      prefix set pref_isp_out;
      {
          pref_isp_out = [ {{ filter }} ];
          if ( net ~ pref_isp_out ) then
          {
              bgp_origin = 0;
              bgp_community = -empty-;
              bgp_community.add(({{ bird.as }},{{ bird.community }}));
              accept "VPS prefix accepted: ",net;
          }
          reject;
      }

      filter ibgp_policy_in
      prefix set pref_isp_in;
      {
          if ! (avoid_martians()) then reject "prefix is martians - REJECTING ", net;
          pref_isp_in = [ {{ filter }} ];
          if ( net ~ pref_isp_in ) then
          {
              accept "VPS remote prefix accepted: ",net;
          }
          reject;
      }

      protocol device {
          scan time 60;
      }

      protocol bgp router {
          description "{{ route.name }}";
          local as {{ bird.as }};
          neighbor {{ router.ip }} as {{ bird.as }};
          default bgp_med 0;
          source address {{ local_ip }};
          import filter none;
          export where source=RTS_BGP;
          export filter ibgp_policy_out;
          rr client;
          rr cluster id {{ local_ip }};
      }

      protocol bgp node1 {
          description "{{ neighbor }}";
          local as {{ bird.as }};
          multihop 255;
          neighbor {{ neighbor_ip }} as {{ bird.as }};
          source address {{ local_ip }};
          import filter ibgp_policy_in;
          export none;
          rr client;
          rr cluster id {{ local_ip }};
      }

- Restart Bird: systemctl restart bird.

- Open the Bird console with the birdc command.

- Check that we have received the list of routes to the VDS from the node:

      show route all protocol node1

- If the routes have not been received, check the log file /var/log/bird.log.
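When routes from a node do not show up, it helps to know what the import filter accepts. The Python sketch below paraphrases the logic of avoid_martians and ibgp_policy_in from the Bird config above; it is an approximation for reasoning about the filter, not a replacement for it. It assumes that the networks in {{ filter }} are written so that more specific prefixes also match (the way the + suffix works in the martians list), and the sample prefixes are invented.

```python
import ipaddress

# Bird's "prefix/len+" pattern: the prefix itself and everything more specific.
MARTIANS_OR_LONGER = [ipaddress.ip_network(p) for p in (
    "169.254.0.0/16", "172.16.0.0/12", "192.168.0.0/16",
    "10.0.0.0/8", "224.0.0.0/4", "240.0.0.0/4",
)]

def is_martian(net):
    # Bird's "0.0.0.0/0{0,7}": any prefix with a length from 0 to 7.
    if net.prefixlen <= 7:
        return True
    return any(net.subnet_of(martian) for martian in MARTIANS_OR_LONGER)

def ibgp_policy_in(prefix, allowed_networks):
    """Return True if the prefix would be accepted, False if rejected."""
    net = ipaddress.ip_network(prefix)
    if is_martian(net):
        return False
    # Assumed "or longer" matching against the allowed networks.
    allowed = [ipaddress.ip_network(a) for a in allowed_networks]
    return any(net.subnet_of(a) for a in allowed)

allowed = ["203.0.113.0/24"]
for candidate in ("203.0.113.10/32", "192.168.1.5/32", "198.51.100.1/32"):
    verdict = "accept" if ibgp_policy_in(candidate, allowed) else "reject"
    print(candidate, verdict)
```

A VM address from a private range is rejected even if that range is listed in the allowed networks, because the martian check runs first.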

Important: if you later need to add new nodes to the cluster, you will also need to edit the RR settings so that the RR peers with each new node.
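Since the RR config needs one protocol bgp block per node, the blocks for new nodes can be generated rather than copied by hand. The Python sketch below produces a block shaped like the node1 block in the config. The node_block helper, the node name node2 and the sample addresses and AS number are assumptions for illustration.

```python
import ipaddress
import re

# Doubled braces are literal braces for str.format().
NODE_BLOCK = """\
protocol bgp {name} {{
    description "{description}";
    local as {asn};
    multihop 255;
    neighbor {neighbor_ip} as {asn};
    source address {local_ip};
    import filter ibgp_policy_in;
    export none;
    rr client;
    rr cluster id {local_ip};
}}
"""

def node_block(name, description, neighbor_ip, local_ip, asn):
    """Render a Bird BGP session block for one cluster node."""
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_]*", name):
        raise ValueError(f"unsuitable protocol name: {name!r}")
    # ip_address() raises ValueError on malformed addresses.
    ipaddress.ip_address(neighbor_ip)
    ipaddress.ip_address(local_ip)
    return NODE_BLOCK.format(
        name=name, description=description,
        neighbor_ip=neighbor_ip, local_ip=local_ip, asn=int(asn),
    )

print(node_block("node2", "hypervisor 2", "10.0.0.12", "10.0.0.2", 65000))
```

After appending the generated block to /etc/bird/bird.conf, Bird has to be restarted in the same way as during the initial setup.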

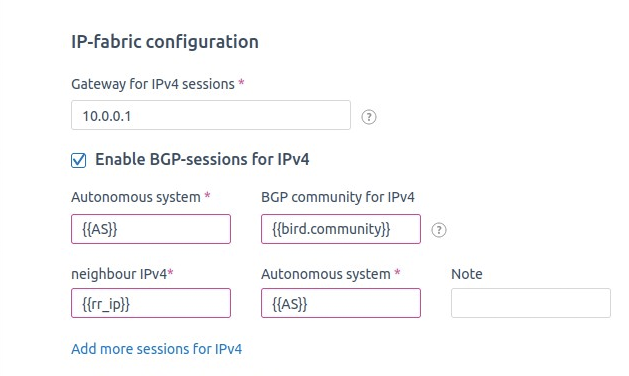

IP-fabric configuration algorithm in the VMmanager platform

The last step is to configure the network in the product interface.

- Install VMmanager on the server;

- In VMmanager 6, create a cluster with IP-fabric network type;

- Specify the information for communication with the Core Gateway via BGP;

- Specify the information to connect to the first RR;

- Enter the Networks section and specify the information about networks and IP address pools;

- Connect servers to be used as hypervisors to the cluster;

- Create a VM or a container.

When you create an IP-fabric cluster, the platform suggests the IP and MAC addresses of the default gateway that will be configured on VMs and containers. This gateway is the virtual vnet interface on the node.

The remaining parameters (the AS, the BGP community (bird.community) and the Route Reflector addresses) are the same as those we used to configure the router and the RR.

That's it, now we have a cluster in VMmanager providing virtual infrastructure to the client using IP-fabric.

Summary and next steps

This year we are planning to develop IP-fabric into a virtual IaaS. End users will be able to get not just a virtual machine or container, but a scope with an unlimited number of isolated private networks. VXLAN and EVPN technologies will help to implement this functionality, but I will talk about this in a future article.

How to try IP-fabric

I invite you to share your opinion in the comments and give IP-fabric in VMmanager a try.

Acknowledgments

Many thanks to the following people for their help with this article and for testing and trial-running the IP-fabric beta version in VMmanager:

Ilya Kalinichenko, DevOps Engineer at ISPsystem;

Stas Titov, Network Architect at FirstVDS;

Oleg Sirotkin, System Architect at G-core labs.